Inverse transformation


Let $\mathbf{T}$ be a general homogeneous transformation matrix. The inverse transformation $\mathbf{T}^{-1}$ corresponds to the transformation that reverts the rotation and translation effected by $\mathbf{T}$. If a vector is pre-multiplied by $\mathbf{T}$ and subsequently pre-multiplied by $\mathbf{T}^{-1}$, the result is the original coordinates, because $\mathbf{T}^{-1}\mathbf{T} = \mathbf{I}$ and multiplication with the identity matrix does not change anything (see transformations).

The general homogeneous transformation matrix  for three-dimensional space consists of a 3-by-3 rotation matrix

for three-dimensional space consists of a 3-by-3 rotation matrix  and a 3-by-1 translation vector

and a 3-by-1 translation vector  combined with the last row of the identity matrix:

combined with the last row of the identity matrix:

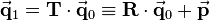
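As a minimal sketch of this block structure, the following Python snippet assembles $\mathbf{T}$ from $\mathbf{R}$ and $\vec{\mathbf{p}}$ using plain nested lists. The helper name `make_transform` is hypothetical, and the numbers are the rotation and translation of the example later in this article under the assumption $a = 1$.

```python
def make_transform(R, p):
    """Assemble a 4x4 homogeneous transform from a 3x3 rotation R and a 3-vector p (hypothetical helper)."""
    # Rows 0..2: each row of R followed by the matching component of p.
    top = [list(R[i]) + [p[i]] for i in range(3)]
    # Append the last row of the 4x4 identity matrix.
    return top + [[0, 0, 0, 1]]

# Example values (assumed: a = 1, so the translation is [2a, 0, 0] = [2, 0, 0]).
R = [[0, 1, 0],
     [0, 0, -1],
     [-1, 0, 0]]
p = [2, 0, 0]

T = make_transform(R, p)
for row in T:
    print(row)
```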

As stated in the article about homogeneous coordinates, multiplication with $\mathbf{T}$ is equivalent in Cartesian coordinates to first applying the rotation matrix $\mathbf{R}$ and then translating the coordinates by $\vec{\mathbf{p}}$, where $\vec{\mathbf{x}}$ denotes the original and $\vec{\mathbf{x}}'$ the transformed Cartesian coordinates:

$$
\vec{\mathbf{x}}' = \mathbf{R}\,\vec{\mathbf{x}} + \vec{\mathbf{p}}
$$
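This equivalence can be checked numerically. The sketch below uses plain Python, a hypothetical helper `mat_vec`, and an arbitrarily chosen point (an assumption for illustration). It multiplies the homogeneous point, extended by a fourth component equal to 1, by $\mathbf{T}$ and compares the result with rotating first and translating afterwards.

```python
def mat_vec(M, v):
    # Plain matrix-vector product for nested lists (hypothetical helper).
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]

R = [[0, 1, 0], [0, 0, -1], [-1, 0, 0]]
p = [2, 0, 0]
T = [R[i] + [p[i]] for i in range(3)] + [[0, 0, 0, 1]]

x = [1, 2, 3]  # arbitrary Cartesian point (assumed)

# Homogeneous route: append w = 1 and multiply by T.
x_h = mat_vec(T, x + [1])

# Cartesian route: rotate with R first, then translate by p.
Rx = mat_vec(R, x)
x_c = [Rx[i] + p[i] for i in range(3)]

print(x_h[:3], x_c)  # → [4, -3, -1] [4, -3, -1]
```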

The equation above is now solved for  . Here the fact is used, that the inverse of 3-by-3 rotation matrices equals the transpose of the matrix:

. Here the fact is used, that the inverse of 3-by-3 rotation matrices equals the transpose of the matrix:

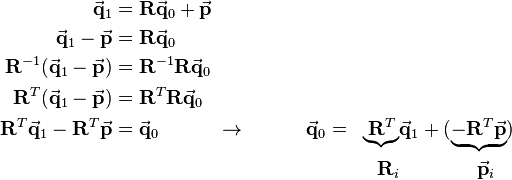
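A short numerical sketch of this step: a point is transformed forward with a rotation and a translation, then recovered using only the transpose of the rotation matrix. The rotation (30 degrees about the z-axis), the translation, the point, and the helper names `transpose` and `mat_vec` are all assumptions for illustration.

```python
import math

def transpose(M):
    # Swap rows and columns of a nested-list matrix (hypothetical helper).
    return [list(col) for col in zip(*M)]

def mat_vec(M, v):
    # Plain matrix-vector product (hypothetical helper).
    return [sum(M[i][j] * v[j] for j in range(len(v))) for i in range(len(M))]

# Assumed example: rotation by 30 degrees about the z-axis.
c, s = math.cos(math.radians(30)), math.sin(math.radians(30))
R = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
p = [1.0, -2.0, 0.5]
x = [0.3, 0.7, -1.2]

# Forward: x' = R x + p
x_prime = [a + b for a, b in zip(mat_vec(R, x), p)]
# Backward: x = R^T (x' - p), no general matrix inversion needed
x_back = mat_vec(transpose(R), [a - b for a, b in zip(x_prime, p)])

print(all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(x, x_back)))  # → True
```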

Based on this, the inverse of a homogeneous transformation matrix is defined as:

$$
\mathbf{T}^{-1}=
\left[\begin{array}{ccc|c}
 & & & \\
 & \mathbf{R} & & \vec{\mathbf{p}}\\
 & & & \\ \hline
0 & 0 & 0 & 1
\end{array}\right]^{-1}=
\left[\begin{array}{ccc|c}
 & & & \\
 & \mathbf{R}^T & & -\mathbf{R}^T\vec{\mathbf{p}}\\
 & & & \\ \hline
0 & 0 & 0 & 1
\end{array}\right]
$$

So instead of using the adjugate formula or the Gauß-Jordan algorithm to invert a homogeneous transformation matrix, only the embedded rotation matrix $\mathbf{R}$ has to be transposed. The transpose $\mathbf{R}^T$ forms the rotational part of the inverse transformation matrix, and its negated product with the translation vector, $-\mathbf{R}^T\vec{\mathbf{p}}$, forms the translational part. The following example checks this definition against a known result.
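The rule translates directly into code. The sketch below builds $\mathbf{T}^{-1}$ from $\mathbf{R}^T$ and $-\mathbf{R}^T\vec{\mathbf{p}}$ and checks that $\mathbf{T}^{-1}\mathbf{T}$ is the identity matrix. It uses plain Python lists; the helpers `invert_transform` and `mat_mul` are hypothetical names, and the test matrix (whose rotational part cyclically permutes the axes) is an assumed example.

```python
def invert_transform(T):
    """Invert a 4x4 homogeneous transform via R^T and -R^T p (hypothetical helper)."""
    R = [row[:3] for row in T[:3]]                      # rotational part
    p = [row[3] for row in T[:3]]                       # translational part
    Rt = [[R[j][i] for j in range(3)] for i in range(3)]  # transpose of R
    t = [-sum(Rt[i][k] * p[k] for k in range(3)) for i in range(3)]  # -R^T p
    return [Rt[i] + [t[i]] for i in range(3)] + [[0, 0, 0, 1]]

def mat_mul(A, B):
    # Plain matrix product for nested lists (hypothetical helper).
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

# Assumed example: a proper rotation (det = +1) plus a translation.
T = [[0, 0, 1, 5],
     [1, 0, 0, -1],
     [0, 1, 0, 3],
     [0, 0, 0, 1]]

T_inv = invert_transform(T)
print(mat_mul(T_inv, T))  # → [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
```

This shortcut is only valid when the upper-left 3-by-3 block is a rotation matrix; for an arbitrary 4-by-4 matrix, a general inversion method is still required.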

Consider the transformation matrix

$$
{}^R\mathbf{T}_N =
\left[\begin{array}{ccc|c}
 & & & \\
 & \mathbf{R} & & \vec{\mathbf{p}}\\
 & & & \\ \hline
0 & 0 & 0 & 1
\end{array}\right]=
\left[\begin{array}{cccc}
0 & 1 & 0 & 2a\\
0 & 0 & -1 & 0\\
-1 & 0 & 0 & 0\\
0 & 0 & 0 & 1
\end{array}\right] \quad\rightarrow\quad
\mathbf{R}=\left[\begin{array}{ccc}
0 & 1 & 0 \\
0 & 0 & -1\\
-1 & 0 & 0
\end{array}\right], \quad
\vec{\mathbf{p}}=\left[\begin{array}{c}
2a\\
0\\
0
\end{array}\right]
$$

which is introduced in the script on page 3-61 and has already been used as an example. Now the definition from above is used to compute the inverse transformation:

$$
\begin{aligned}
\mathbf{R}^T &=
\left[\begin{array}{ccc}
0 & 0 & -1 \\
1 & 0 & 0\\
0 & -1 & 0
\end{array}\right] \quad\rightarrow\quad
-\mathbf{R}^T\vec{\mathbf{p}} =
-\left[\begin{array}{ccc}
0 & 0 & -1 \\
1 & 0 & 0\\
0 & -1 & 0
\end{array}\right]
\left[\begin{array}{c}
2a\\
0\\
0
\end{array}\right] =
-\left[\begin{array}{c}
0\\
2a\\
0
\end{array}\right] =
\left[\begin{array}{c}
0\\
-2a\\
0
\end{array}\right]\\
{}^R\mathbf{T}_N^{-1} &=
\left[\begin{array}{ccc|c}
 & & & \\
 & \mathbf{R}^T & & -\mathbf{R}^T\vec{\mathbf{p}}\\
 & & & \\ \hline
0 & 0 & 0 & 1
\end{array}\right]=
\left[\begin{array}{cccc}
0 & 0 & -1 & 0\\
1 & 0 & 0 & -2a\\
0 & -1 & 0 & 0\\
0 & 0 & 0 & 1
\end{array}\right]
\end{aligned}
$$

In the main article about matrix inversion, exactly this inverse matrix ${}^R\mathbf{T}_N^{-1}$ has already been proven to be the correct inverse of ${}^R\mathbf{T}_N$.
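The example can also be checked numerically. The sketch below evaluates ${}^R\mathbf{T}_N$ for a few concrete values of $a$ (an assumption; the article keeps $a$ symbolic), applies the transpose-based rule $\mathbf{R}^T$, $-\mathbf{R}^T\vec{\mathbf{p}}$, and compares the result with the inverse computed by hand in the example. All function names are hypothetical.

```python
def invert_transform(T):
    # Rotational part: transpose of R; translational part: -R^T p.
    Rt = [[T[j][i] for j in range(3)] for i in range(3)]
    t = [-sum(Rt[i][k] * T[k][3] for k in range(3)) for i in range(3)]
    return [Rt[i] + [t[i]] for i in range(3)] + [[0, 0, 0, 1]]

def example_T(a):
    # ^R T_N from the example (script page 3-61) for a concrete numeric a.
    return [[0, 1, 0, 2 * a],
            [0, 0, -1, 0],
            [-1, 0, 0, 0],
            [0, 0, 0, 1]]

def expected_inverse(a):
    # The inverse ^R T_N^{-1} computed by hand in the example.
    return [[0, 0, -1, 0],
            [1, 0, 0, -2 * a],
            [0, -1, 0, 0],
            [0, 0, 0, 1]]

for a in (1, 2.5):
    print(a, invert_transform(example_T(a)) == expected_inverse(a))
# → 1 True
# → 2.5 True
```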